Hi 👋, I’m Lang (/læŋ/).

I am currently a first-year PhD student at MBZUAI, a great place for research. I’m fortunate to be supervised by Dr. Xiuying Chen, an outstanding rising star and a truly supportive mentor. I am also co-supervised by the excellent Prof. Preslav Nakov.

Previously, I received my bachelor’s degree in Computer Science and Technology from Huazhong University of Science and Technology (HUST) in 2025.

💡 Interests

- Mechanistic Interpretability (MI): Understanding the mechanistic reasons why foundation models can or cannot do something, and how to steer their behavior by exploiting their nature, with the goal of making them more interpretable, controllable, and trustworthy.

  (Recently, I have been particularly interested in the geometric features of latent spaces and in ways to move beyond the Linear Representation Hypothesis.)

- Trustworthy AI (secondary): Exploring the reliable application of AI.

I warmly welcome all kinds of collaborations, especially on topics related to interpretability and trustworthy AI. If you are interested, feel free to reach out and start a conversation!

📝 Selected Publications

Please refer to my Google Scholar for the full list of publications.

🧑‍🔬 Mechanistic Interpretability

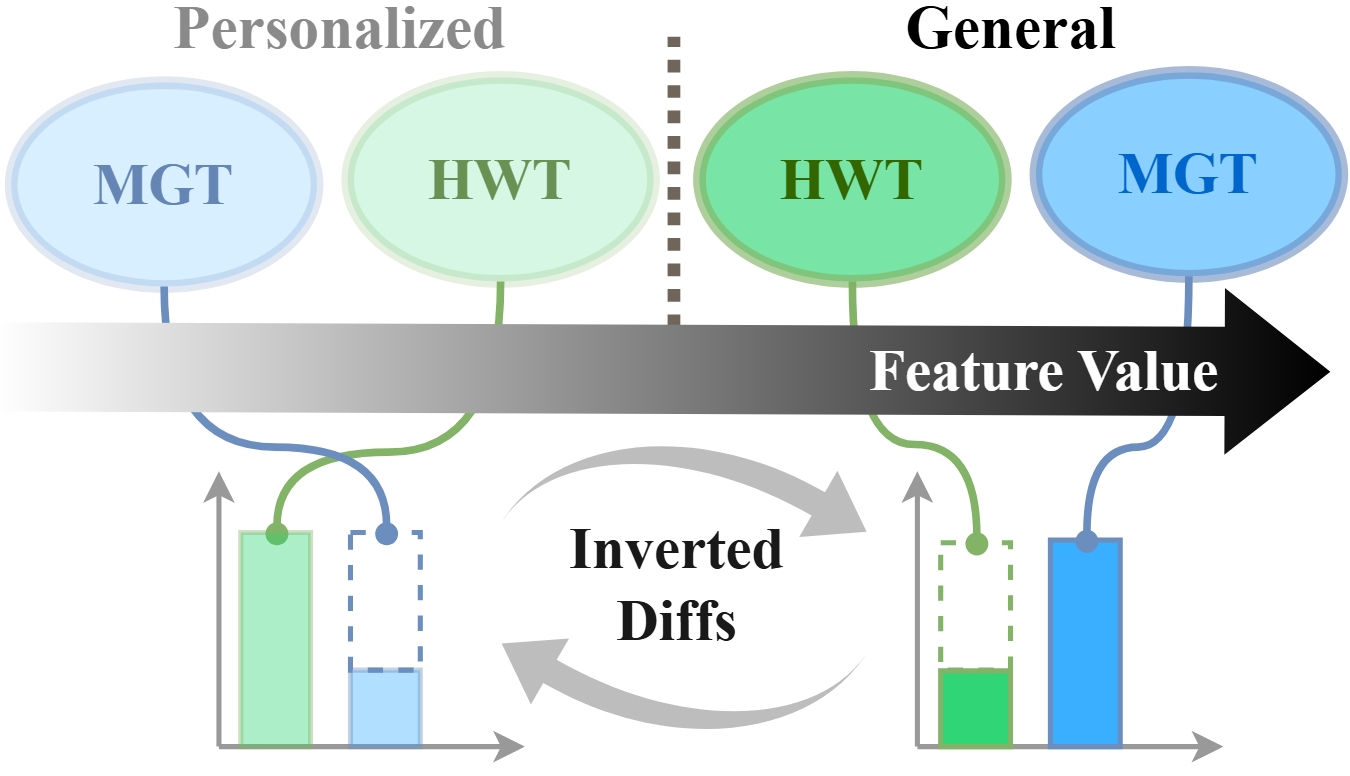

When Personalization Tricks Detectors: The Feature-Inversion Trap in Machine-Generated Text Detection

Lang Gao, Xuhui Li, Chenxi Wang, Mingzhe Li, Wei Liu, Zirui Song, Jinghui Zhang, Rui Yan, Preslav Nakov, and Xiuying Chen

“What if I told you that your AI detector can still achieve a high AUC on random tokens? The first work to reveal the weak transferability of machine-generated text detectors to personalized content, together with a mechanistic interpretation.”

| Paper | Data & Code |

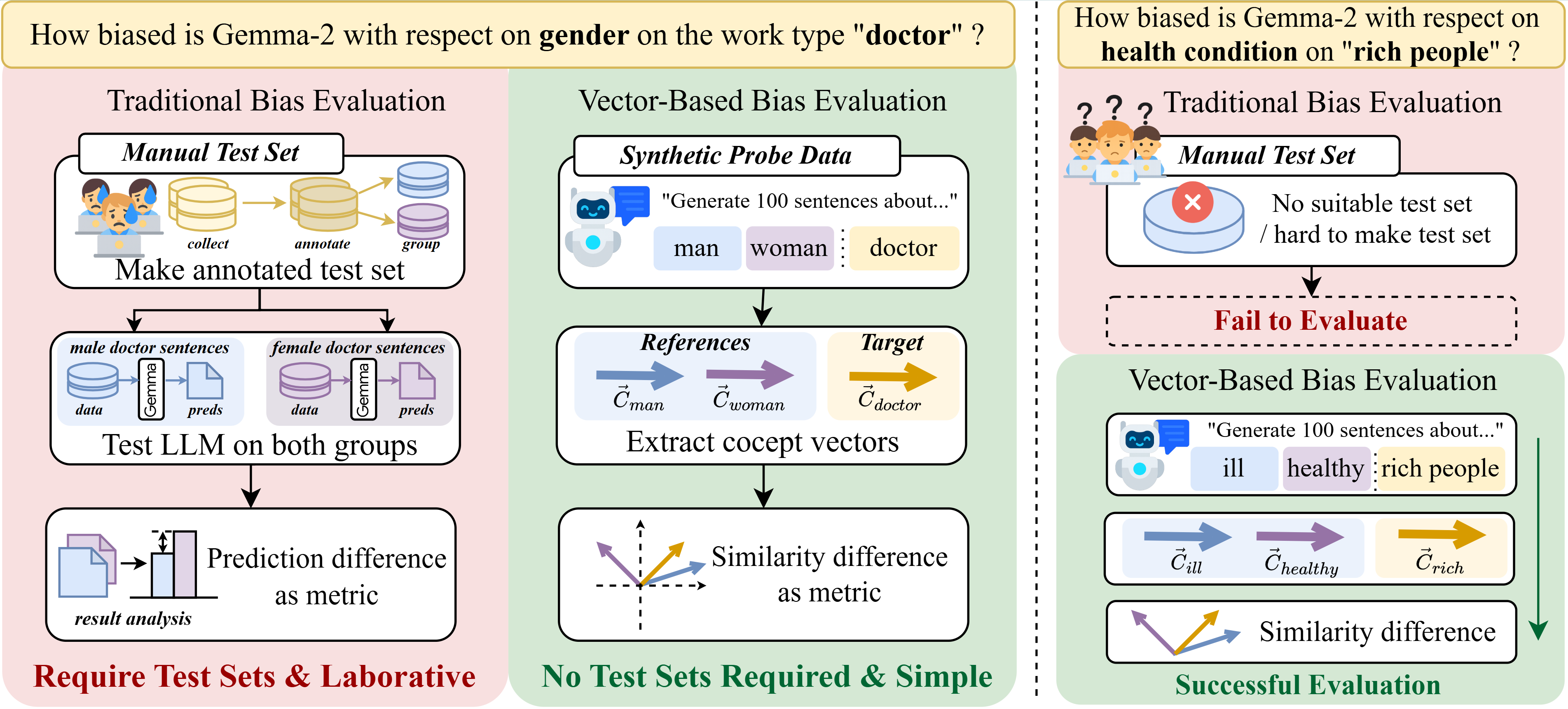

Evaluate Bias without Manual Test Sets: A Concept Representation Perspective for LLMs

Shaping the Safety Boundaries: Understanding and Defending Against Jailbreaks in Large Language Models

Lang Gao, Jiahui Geng, Xiangliang Zhang, Preslav Nakov, and Xiuying Chen

“An attempt to interpret the common mechanisms of diverse LLM jailbreak attacks in the activation space, and to propose an efficient defense method.”

| Paper | MBZUAI News |

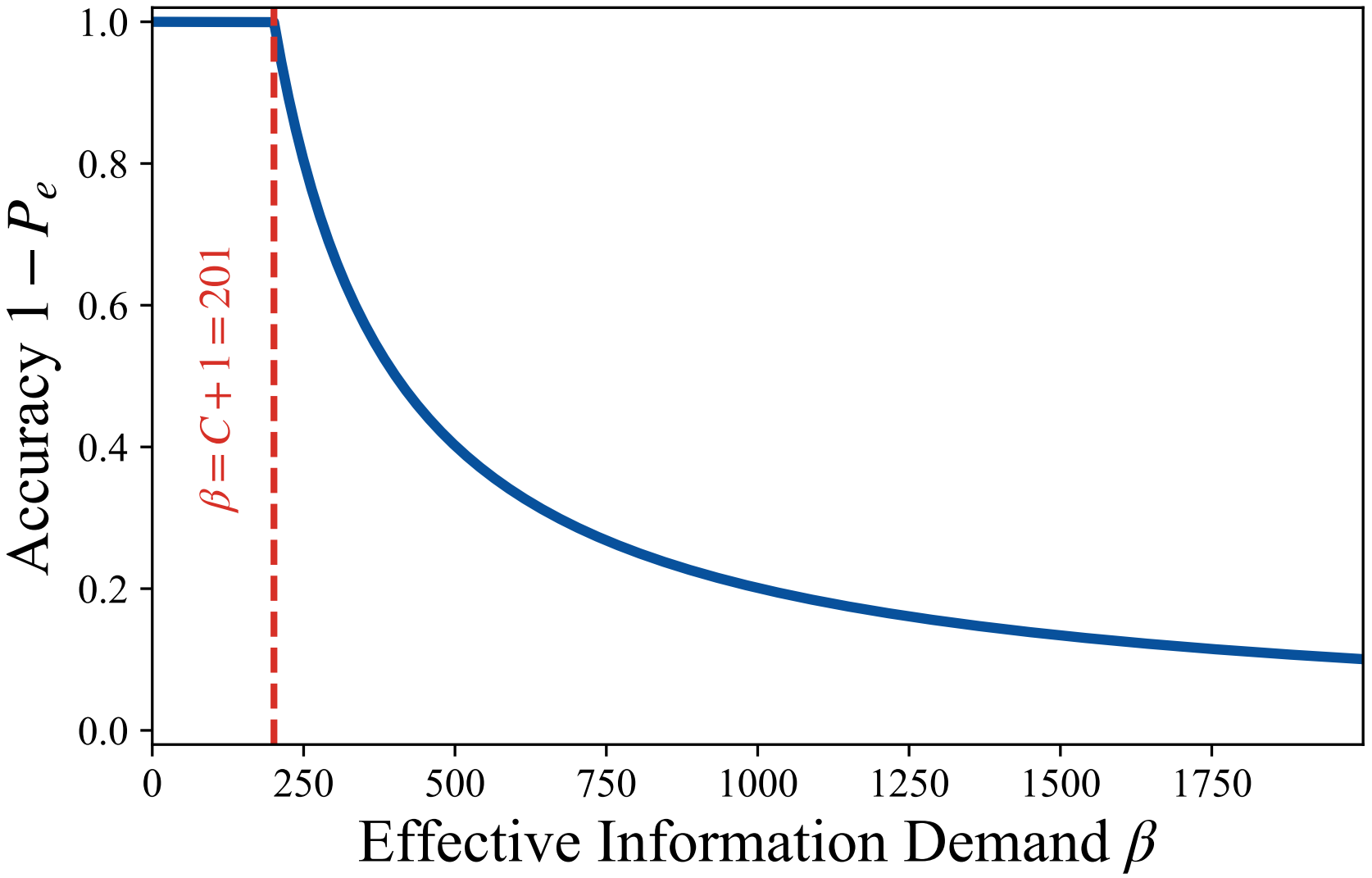

A Fano-Style Accuracy Upper Bound for LLM Single-Pass Reasoning in Multi-Hop QA

Kaiyang Wan, Lang Gao, Honglin Mu, Preslav Nakov, Yuxia Wang, Xiuying Chen

“An LLM’s accuracy breaks down severely once the required information exceeds its internal capacity in complex multi-hop reasoning scenarios.”

| Paper | Code | MIT Tech Review CN |

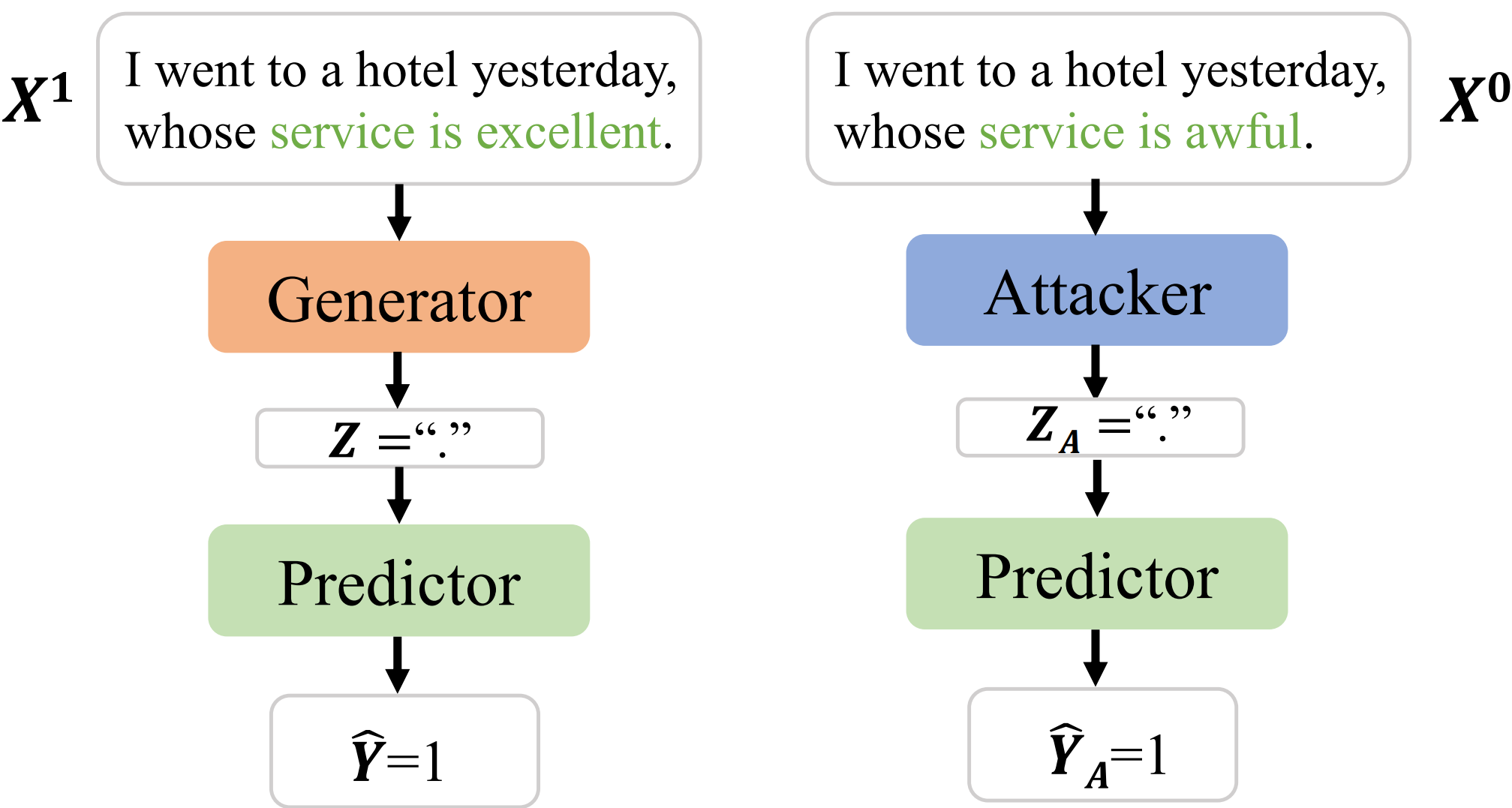

Adversarial Cooperative Rationalization: The Risk of Spurious Correlations in Even Clean Datasets

👨‍🔧 Applications

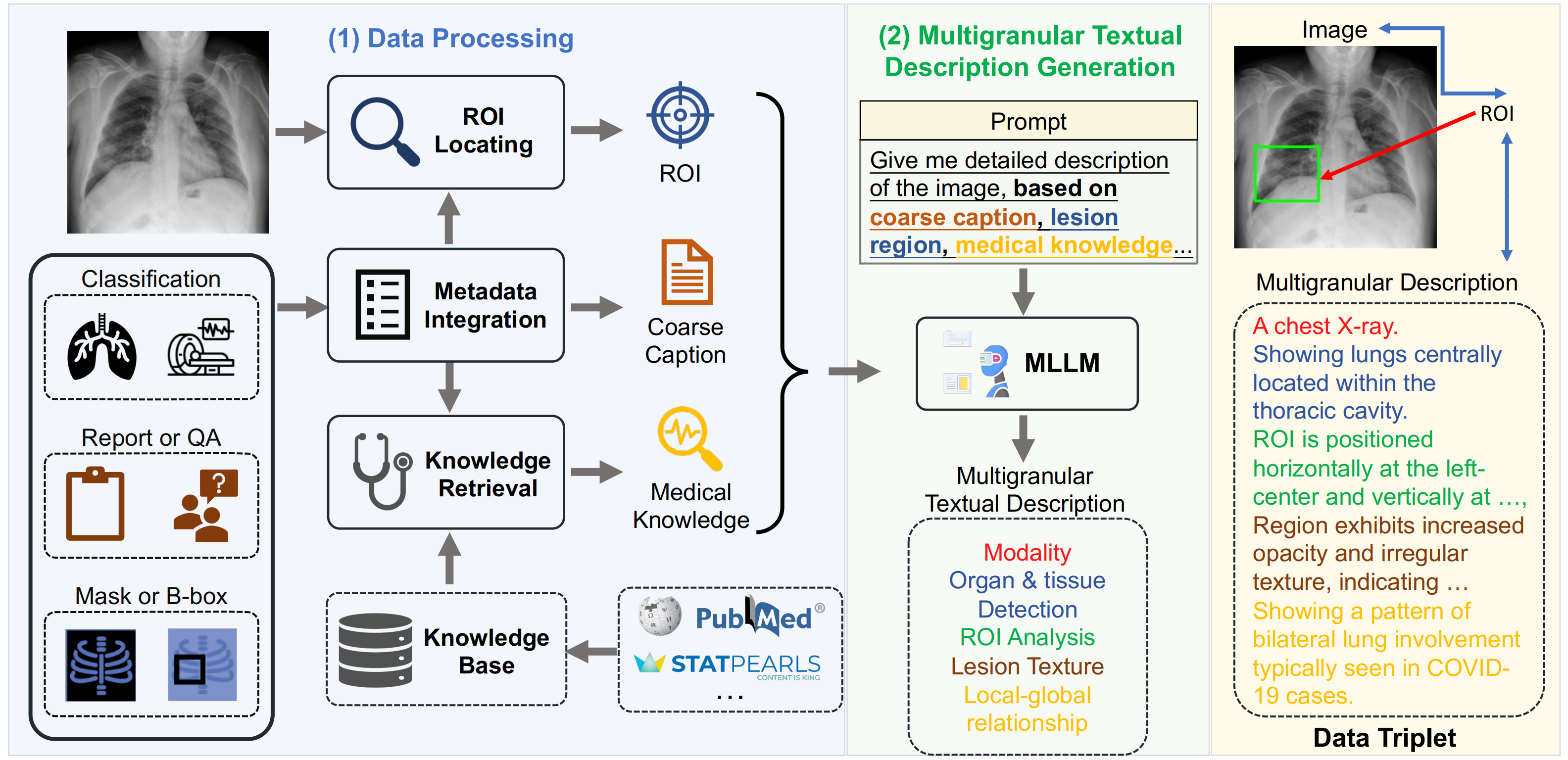

MedTrinity-25M: A Large-scale Multimodal Dataset with Multigranular Annotations for Medicine

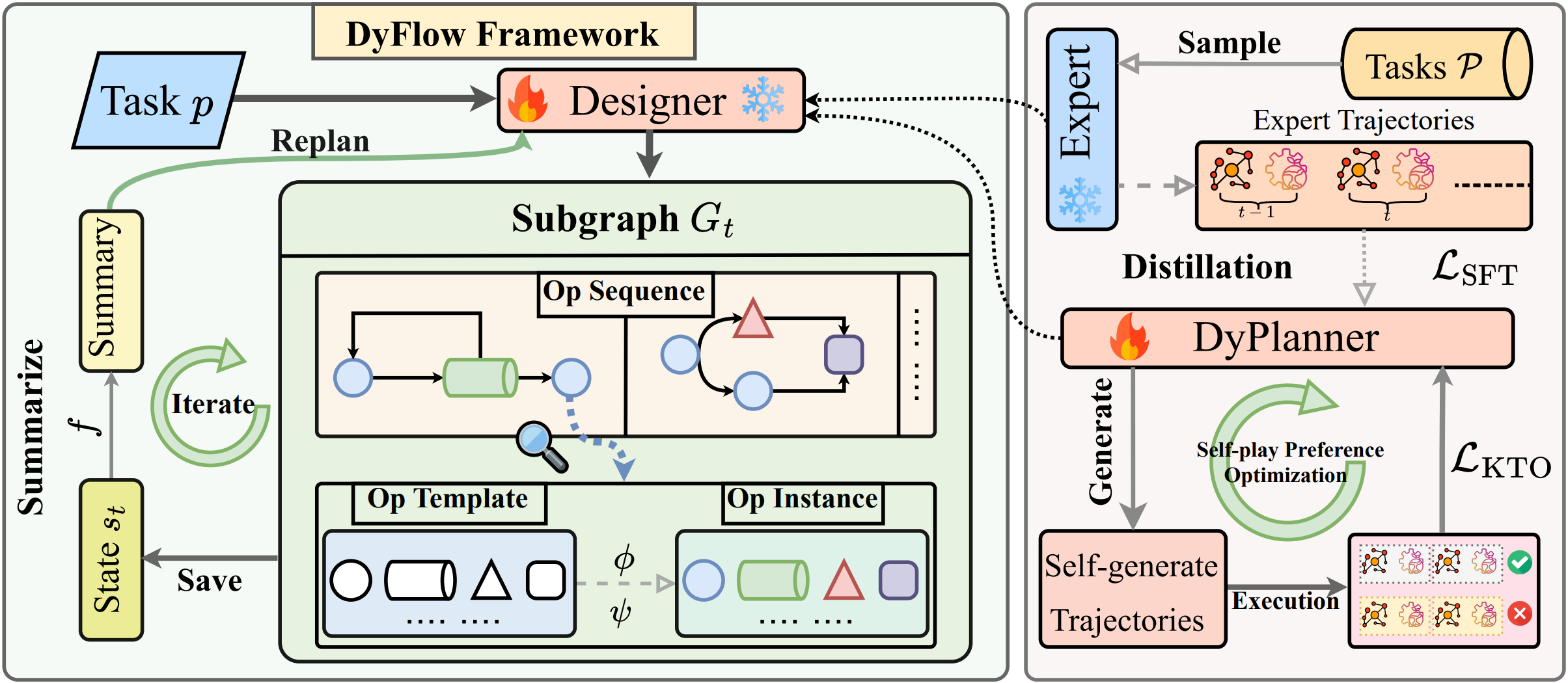

DyFlow: Dynamic Workflow Framework for Agentic Reasoning

Yanbo Wang, Zixiang Xu, Yue Huang, Xiangqi Wang, Zirui Song, Lang Gao, Chenxi Wang, Xiangru Tang, Yue Zhao, Arman Cohan, Xiangliang Zhang, and Xiuying Chen

“DyFlow is a dynamic workflow framework for LLM-based agents that adapts its reasoning steps in real time using intermediate feedback, enabling better generalisation across diverse tasks.”

| Paper | Code |

🧐 Service

- 2025, Reviewer: ACL, EMNLP, NLPCC.

💼 Experiences

- [10 / 2024 - 07 / 2025] MBZUAI, Research Intern (Supervisor: Dr. Xiuying Chen, topic: Mechanistic Interpretability of LLMs)

- [07 / 2024 - 10 / 2024] University of Notre Dame, Research Intern (Supervisor: Prof. Xiangliang Zhang, topic: LLMs for Bayesian Optimization)

- [01 / 2024 - 06 / 2024] UC Santa Cruz, Research Intern (Supervisor: Dr. Yuyin Zhou, topic: Visual-Language models for healthcare)

- [10 / 2023 - 12 / 2023] HUST (Supervisor: Prof. Ruixuan Li, topic: Interpretable deep learning frameworks)

💬 I am deeply grateful to all the mentors and collaborators who have guided and supported me along the way. Your encouragement, trust, and inspiration have made all the difference in my journey.

📖 Education

08 / 2025 - Now: Ph.D. student, Mohamed bin Zayed University of Artificial Intelligence

09 / 2021 - 07 / 2025: B.E., Huazhong University of Science and Technology

🧩 Miscellaneous

📚 Resources

Insights

- Book: Interpretability in Deep Learning [Link]

- Book: Interpretable Machine Learning [Link]

- Book: Trustworthy Machine Learning [Link]

- Book: 大语言模型 (The Chinese Book for Large Language Models) [Link]

- Article: The Bitter Lesson [Link]

- Article: The Urgency of Interpretability [Link]

Blogs

- [05/24] [Chinese] National Undergraduate Innovation Project Documentation. [Link]

- [03/24] [Chinese] Negative Transfer. [Link]

- [03/24] [Chinese] Mixture of Experts Explained. [Link]

- [01/24] [Chinese] EMNLP2020 Tutorial Notes (Topic: Explainable AI). [Link]

Other Stuff

I also like photography. Sometimes I take good photos by accident, so I might upload a few here someday, along with some unnecessary commentary. Feel free to pretend you’re looking forward to it. 🙃